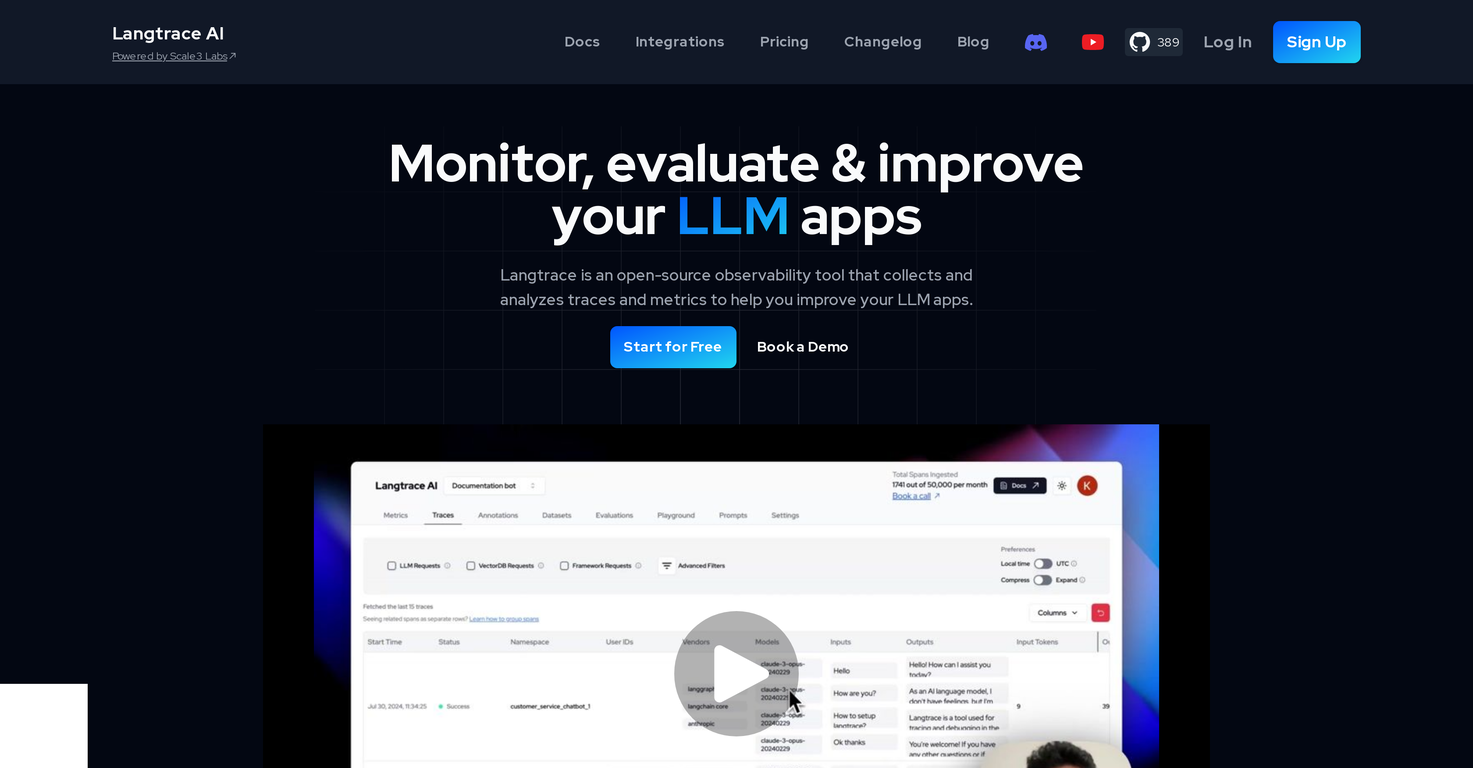

How does Langtrace AI monitor and optimize large language models?

Langtrace AI monitors and optimizes LLMs by collecting and analyzing traces and metrics. It offers end-to-end observability of the machine learning pipeline and builds 'golden datasets' from traced LLM interactions. These capabilities enable continuous testing and enhancement of AI applications, performance comparison across models, and tracking of cost and latency at the project, model, and user levels.

What are the real-time insights provided by Langtrace AI?

Langtrace AI's real-time insights consist primarily of performance metrics. They give visibility into the entire machine learning process, so users can observe and manage the performance of their LLM applications, track cost and latency at different levels, and compare performance across models.

What data is collected by Langtrace AI for optimization?

Langtrace AI collects traces and metrics to optimize LLMs. This data is gathered to provide insights into the AI applications, support the creation of golden datasets with traced LLM interactions, and facilitate continuous testing, enhancement, and performance comparison of different AI models.

How simple is the setup process of Langtrace AI?

The setup process of Langtrace AI is designed to be simple and non-intrusive: integrating the Langtrace Software Development Kit (SDK) into an application takes just two lines of code.

What is a Software Development Kit (SDK) and how is it related to Langtrace AI?

A Software Development Kit (SDK) is a collection of software tools and libraries that help developers build applications. The Langtrace SDK is the primary way to access and use Langtrace's services, and it requires only two lines of code to set up.

Which popular language models and frameworks are supported by Langtrace AI?

Langtrace AI supports a range of popular LLMs, frameworks, and vector databases. These include, but are not limited to, OpenAI, Google Gemini, and Anthropic.

How can Langtrace AI assist with the creation of golden datasets?

Langtrace AI assists with the creation of 'golden datasets' by annotating and tracing LLM interactions. This process enables the continuous testing and enhancement of AI applications, with the generated golden datasets serving as a benchmark for optimizing LLM performance.
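As a rough illustration of the idea, the sketch below filters annotated traces into a golden dataset and scores a candidate model against it. It does not use the Langtrace SDK: the `approved` field, the function names, and the stub model are all hypothetical and chosen only to make the example self-contained.

```python
from typing import Callable, Dict, List

# Hypothetical annotated traces; "approved" stands in for a reviewer's judgement.
traces: List[Dict] = [
    {"prompt": "2 + 2", "response": "4", "approved": True},
    {"prompt": "capital of France", "response": "Paris", "approved": True},
    {"prompt": "3 * 3", "response": "6", "approved": False},
]


def build_golden_dataset(traces: List[Dict]) -> List[Dict]:
    """Keep only approved traces, storing the response as the expected answer."""
    return [
        {"prompt": t["prompt"], "expected": t["response"]}
        for t in traces
        if t["approved"]
    ]


def pass_rate(model: Callable[[str], str], golden: List[Dict]) -> float:
    """Fraction of golden examples for which the model returns the expected answer."""
    if not golden:
        return 0.0
    passed = sum(1 for ex in golden if model(ex["prompt"]) == ex["expected"])
    return passed / len(golden)


def candidate_model(prompt: str) -> str:
    # Stub standing in for a real model call so the sketch runs offline.
    answers = {"2 + 2": "4", "capital of France": "Lyon"}
    return answers.get(prompt, "")


golden = build_golden_dataset(traces)
print(pass_rate(candidate_model, golden))
```

Exact string matching is the simplest possible check; a real evaluation would likely use a more tolerant comparison.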

How does Langtrace AI help in tracking the cost and latency at different levels?

Langtrace AI provides tools for tracking cost and latency at several levels, such as the project, model, and user levels. These metrics let users monitor and manage the efficiency of their AI applications by assessing usage costs and response times at each level.
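To make the notion of "levels" concrete, here is a minimal sketch that groups trace records by project, model, or user and reports total cost and mean latency for each group. The record fields and values are invented for illustration and are not Langtrace's actual data model.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical trace records; field names and numbers are made up.
records: List[Dict] = [
    {"project": "chatbot", "model": "model-a", "user": "u1", "cost_usd": 0.002, "latency_ms": 420},
    {"project": "chatbot", "model": "model-b", "user": "u2", "cost_usd": 0.010, "latency_ms": 910},
    {"project": "search", "model": "model-a", "user": "u1", "cost_usd": 0.004, "latency_ms": 380},
]


def summarize(records: List[Dict], level: str) -> Dict[str, Tuple[float, float]]:
    """Return {key: (total_cost_usd, mean_latency_ms)} grouped by one level."""
    groups: Dict[str, List[Dict]] = defaultdict(list)
    for r in records:
        groups[r[level]].append(r)
    return {
        key: (
            round(sum(r["cost_usd"] for r in rows), 6),  # total cost
            sum(r["latency_ms"] for r in rows) / len(rows),  # mean latency
        )
        for key, rows in groups.items()
    }


for level in ("project", "model", "user"):
    print(level, summarize(records, level))
```

Grouping by `model` in the same way gives a simple side-by-side view of cost and latency across models.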

What does the community-driven nature of Langtrace AI mean?

The community-driven nature of Langtrace AI refers to its open-source base, welcoming input and contributions from its user community. This supports the ongoing development and enhancement of the tool in a collaborative, competitive space.

What are the core capabilities of Langtrace AI?

The core capabilities of Langtrace AI encompass end-to-end observability of machine learning pipelines, creation of golden datasets through traced LLM interactions for constant testing and enhancement of AI applications, and the ability to compare performance across different models while tracking cost and latency at numerous levels.

How does Langtrace AI ensure end-to-end observability of a machine learning pipeline?

Langtrace AI ensures end-to-end observability of a machine learning pipeline by collecting and analyzing traces and metrics. It delivers comprehensive visibility into the full process, enabling users to monitor, evaluate, and enhance the performance of their LLM applications, supported by its capability to compare performance across different models and track cost and latency at multiple levels.

What do you mean by 'traced LLM interactions'?

'Traced LLM interactions' are the exchanges between a Large Language Model and the application that calls it, logged so they can be analyzed. Langtrace AI uses these traces to create 'golden datasets' and, in turn, to support the continuous testing and improvement of AI applications.
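The general idea of tracing can be sketched with a small decorator that records the prompt, response, and latency of each call. This is a conceptual illustration only, not how the Langtrace SDK is implemented: `Trace`, `TRACES`, `traced`, and `fake_llm` are hypothetical names, and the "model" is a stub.

```python
import time
from dataclasses import dataclass
from functools import wraps
from typing import Callable, List


@dataclass
class Trace:
    """One logged exchange between the application and a model."""
    model: str
    prompt: str
    response: str
    latency_s: float


# Hypothetical in-memory store; a real tool would export traces to a backend.
TRACES: List[Trace] = []


def traced(model: str) -> Callable:
    """Wrap a prompt -> response function so every call is logged as a Trace."""
    def decorator(fn: Callable[[str], str]) -> Callable[[str], str]:
        @wraps(fn)
        def wrapper(prompt: str) -> str:
            start = time.perf_counter()
            response = fn(prompt)
            elapsed = time.perf_counter() - start
            TRACES.append(Trace(model, prompt, response, elapsed))
            return response
        return wrapper
    return decorator


@traced(model="stub-model")
def fake_llm(prompt: str) -> str:
    # Stand-in for a real model call so the sketch runs offline.
    return prompt.upper()


fake_llm("hello")
print(TRACES[-1])
```

Because the logging lives in the wrapper, the application code that calls `fake_llm` does not change, which is the sense in which this style of instrumentation is non-intrusive.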

What popular LLMs does Langtrace AI support?

Langtrace AI supports a variety of widely used Large Language Models (LLMs). Notable examples include models from OpenAI, Google (Gemini), and Anthropic.

How does Langtrace AI support continuous testing and enhancement of AI applications?

Langtrace AI supports continuous testing and enhancement of AI applications by tracing LLM interactions and creating 'golden datasets' from them. These capabilities allow for ongoing monitoring, evaluation, and optimization of LLM apps, ultimately improving their performance and cost-effectiveness.

What are the performance metrics provided by Langtrace AI?

The performance metrics provided by Langtrace AI give a detailed view of the application's efficiency. They support tracing requests, detecting bottlenecks, and optimizing performance, and they track cost and latency at levels such as project, model, and user.

Does Langtrace AI support OpenAI or Google Gemini?

Yes, Langtrace AI does support both OpenAI and Google Gemini, among others. These are part of a wider set of popular LLMs, frameworks, and vector databases that Langtrace AI is compatible with.

Can I compare model performance across different models using Langtrace AI?

Yes, you can compare model performance across different models using Langtrace AI. Its end-to-end observability tools and collection of traces and metrics facilitate the comparison of performance, providing a comprehensive platform for evaluating and optimizing AI applications.
